Sensor fusion represents a paradigm shift in how IoT systems perceive and understand their environments. It combines data from multiple heterogeneous sensors into a unified estimate that is more accurate, more reliable, and richer in context than any individual sensor can provide. The benefit goes beyond redundancy: fusion exploits the complementary strengths and weaknesses of different sensor types to deliver capabilities that no single sensor offers on its own.

The principle underlying sensor fusion is simple but powerful. Individual sensors are inherently limited (temperature sensors cannot detect motion, accelerometers cannot measure pressure, cameras fail in darkness), but by strategically combining sensors that measure different physical phenomena, a system gains multi-dimensional awareness. Consider orientation in a smartphone. An accelerometer alone can estimate tilt from the direction of gravity while the phone is still, but it cannot determine heading and is confused by the phone’s own motion. Adding a gyroscope provides smooth orientation tracking even during rapid rotation, but integrating its angular rate accumulates drift, so long-term estimates become increasingly inaccurate. The accelerometer’s gravity reference corrects drift in tilt, and adding a magnetometer supplies a compass heading that corrects drift around the vertical axis. Fused together, these three sensors achieve what none achieves alone: stable, accurate, long-term orientation estimation.
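A complementary filter is one lightweight way to fuse the gyroscope and accelerometer described above. The Python sketch below is a minimal illustration, not a production attitude estimator: it assumes tilt about a single axis, gyroscope rates in rad/s, accelerometer readings in units of g, and a blending weight `alpha` of 0.98 chosen for illustration. The magnetometer heading correction is omitted, and the function names are hypothetical.

```python
import math


def accel_tilt(ax: float, az: float) -> float:
    """Tilt angle (rad) implied by gravity; only valid while the device is not accelerating."""
    return math.atan2(ax, az)


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> list[float]:
    """Fuse gyroscope rate with accelerometer tilt about one axis.

    samples: sequence of (gyro_rate_rad_s, ax_g, az_g) tuples.
    alpha: weight on the integrated gyro path (assumed value); the remaining
    1 - alpha pulls the estimate toward the noisy but drift-free accelerometer angle.
    """
    angle = None
    estimates = []
    for gyro_rate, ax, az in samples:
        acc_angle = accel_tilt(ax, az)
        if angle is None:
            angle = acc_angle  # initialise from the accelerometer
        else:
            # Gyro dominates short-term changes, accelerometer corrects long-term drift
            angle = alpha * (angle + gyro_rate * dt) + (1 - alpha) * acc_angle
        estimates.append(angle)
    return estimates


if __name__ == "__main__":
    dt = 0.01
    true_tilt = 0.2
    # Stationary device, gyro with a constant 0.05 rad/s bias, 10 s of data
    samples = [(0.05, math.sin(true_tilt), math.cos(true_tilt))] * 1000
    print(complementary_filter(samples, dt)[-1])  # about 0.2245
```

Pure integration of that biased gyro would accumulate 0.5 rad of error over 10 s; the filter instead settles at a constant offset of roughly 0.025 rad, because the accelerometer term keeps pulling the estimate back toward the true tilt.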

This comprehensive analysis explores why sensor fusion is becoming essential for intelligent IoT systems, examining the underlying principles, architectural approaches, algorithms, real-world applications, and implementation challenges.

Understanding Context Awareness Through Sensor Fusion

Context awareness is the key capability that sensor fusion gives IoT systems. A context-aware device understands not merely raw data but its situational meaning: the context in which measurements occur. Without context awareness, a wearable detecting an elevated heart rate cannot distinguish normal exercise from an alarming arrhythmia; with context awareness (combining heart rate, accelerometer motion patterns, and skin temperature), the device can tell whether the elevated heart rate is an expected physiological response to activity or an anomalous health event.
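The heart-rate example can be expressed as a simple decision rule that fuses two streams. The sketch assumes acceleration magnitudes in g, sampled over the same window as the heart-rate reading; the 100 bpm and 0.1 g thresholds are illustrative placeholders, not clinical values, and a real device would more likely learn such boundaries than hard-code them.

```python
from statistics import pstdev


def classify_heart_rate(hr_bpm: float, accel_magnitudes_g: list[float],
                        resting_max_bpm: float = 100.0,
                        active_std_g: float = 0.1) -> str:
    """Interpret a heart-rate reading using motion context from an accelerometer.

    Thresholds are illustrative assumptions, not clinical values.
    """
    if hr_bpm <= resting_max_bpm:
        return "normal"
    # Movement intensity: spread of acceleration magnitude over the window
    if pstdev(accel_magnitudes_g) >= active_std_g:
        return "expected: exercise"
    return "anomalous: elevated at rest"


if __name__ == "__main__":
    still = [1.0] * 50           # only gravity: user at rest
    moving = [0.75, 1.25] * 25   # strong periodic motion
    print(classify_heart_rate(130, still))   # anomalous: elevated at rest
    print(classify_heart_rate(130, moving))  # expected: exercise
```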

Smart audio devices show how context awareness enables more sophisticated functionality. A voice assistant receiving spoken input must distinguish intentional commands from background conversation. A microphone alone cannot solve this problem, because human speech and background noise both produce audio signals. Fusing audio with motion sensors (accelerometers detecting whether the device has been picked up, proximity sensors indicating whether a user is nearby) provides the context the assistant needs to tell intentional activation from accidental triggering.

Context-aware systems leverage multi-sensor fusion to derive high-level information from low-level measurements:

- Activity recognition: Accelerometer + gyroscope data fused through machine learning identifies whether users are walking, running, climbing stairs, or sitting, achieving 94-95% accuracy

- Location understanding: GPS + cellular signal strength + WiFi SSID fusion determines not just geographic location but contextual location (indoors/outdoors, in specific buildings), achieving near-perfect discrimination

- Environmental assessment: Temperature + humidity + CO₂ + particulate matter fusion assesses not just atmospheric conditions but occupant comfort and safety
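A common way to fuse accelerometer and gyroscope data for activity recognition is feature-level fusion: compute summary features per sensor over a time window, concatenate them into one vector, and classify that vector. In the sketch below, a nearest-centroid rule stands in for the machine-learning model and the centroid values are hypothetical placeholders rather than trained parameters; accelerometer magnitudes are assumed in g and gyroscope magnitudes in rad/s.

```python
import math
from statistics import mean, pstdev


def window_features(accel_mag_g: list[float], gyro_mag_rad_s: list[float]) -> list[float]:
    """Feature-level fusion: per-sensor statistics concatenated into one vector."""
    return [mean(accel_mag_g), pstdev(accel_mag_g), mean(gyro_mag_rad_s), pstdev(gyro_mag_rad_s)]


# Hypothetical class centroids; in practice a trained model replaces this table
CENTROIDS = {
    "sitting": [1.0, 0.02, 0.02, 0.01],
    "walking": [1.0, 0.25, 0.30, 0.30],
    "running": [1.1, 0.80, 1.20, 0.90],
}


def classify_window(accel_mag_g: list[float], gyro_mag_rad_s: list[float]) -> str:
    """Assign the window to the class whose centroid is closest in feature space."""
    x = window_features(accel_mag_g, gyro_mag_rad_s)
    return min(CENTROIDS, key=lambda label: math.dist(x, CENTROIDS[label]))


if __name__ == "__main__":
    print(classify_window([0.75, 1.25] * 5, [0.0, 0.6] * 5))  # walking
```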

Why Single Sensors Fail: The Fundamental Limitations

Understanding sensor fusion requires appreciating why individual sensors are inherently inadequate for complex applications:

Sensor bias and systematic error: Every sensor carries some built-in inaccuracy. A temperature sensor may read consistently 1-2 degrees high or low, pressure sensors have zero offsets, and accelerometer outputs include a gravitational component that must be separated from motion. In a single sensor these errors are invisible: they appear as ground truth rather than as inaccuracy.

Sensor drift and calibration: Sensor performance degrades over time due to component aging, thermal effects, mechanical stress, and chemical changes (particularly in chemical sensors). A single sensor exhibiting drift cannot self-correct—the drift is interpreted as environmental change rather than sensor degradation. Sensor fusion enables drift detection through cross-sensor validation: when one sensor’s reading diverges from correlated sensors while environmental conditions suggest they should align, drift is indicated.
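Cross-sensor validation can be sketched with redundant sensors measuring the same quantity: each sensor is compared with the median of its peers, and a sensor is flagged only when its deviation persists, so momentary noise is not mistaken for drift. The threshold and persistence values are assumptions to be tuned per deployment, and the sketch assumes at least four co-located sensors so that the median of any sensor’s peers is not pulled off by a single drifting unit.

```python
from statistics import median


def detect_drift(readings: list[list[float]], threshold: float, persistence: int) -> set[int]:
    """Flag sensors whose readings diverge from their co-located peers.

    readings[t][i] is sensor i's reading at time t; all sensors are assumed to
    measure the same quantity (e.g. redundant temperature probes in one room).
    A sensor is flagged once |reading - median(peers)| exceeds `threshold` for
    `persistence` consecutive samples, so brief spikes are ignored.
    """
    n_sensors = len(readings[0])
    run = [0] * n_sensors
    flagged = set()
    for row in readings:
        for i, value in enumerate(row):
            peers = row[:i] + row[i + 1:]
            if abs(value - median(peers)) > threshold:
                run[i] += 1
                if run[i] >= persistence:
                    flagged.add(i)
            else:
                run[i] = 0  # deviation did not persist; reset the counter
    return flagged


if __name__ == "__main__":
    # Sensor 3 drifts upward by 0.1 degrees per sample
    data = [[20.0, 20.0, 20.0, 20.0 + 0.1 * t] for t in range(30)]
    print(detect_drift(data, threshold=1.0, persistence=5))  # {3}
```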

Environmental sensitivity and noise: Sensors respond to environmental factors outside their measurement domain. Temperature shifts the output of pressure sensors and the offsets of MEMS accelerometers and gyroscopes; electromagnetic interference distorts magnetic compasses. A single sensor cannot separate these interference effects from the quantity it is meant to measure, whereas a fused system can use a co-located measurement of the disturbing factor (temperature, for example) to compensate for it.
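As a concrete example of compensating for such effects, a pressure sensor’s temperature sensitivity can be characterized by logging its error at a known, constant reference pressure across a range of temperatures, fitting a line, and then subtracting the predicted error in operation using a co-located temperature reading. The sketch assumes the error is linear in temperature, which real devices satisfy only approximately; the function names and calibration numbers are hypothetical.

```python
def fit_temperature_error(temps: list[float], pressure_errors: list[float]) -> tuple[float, float]:
    """Least-squares fit of error = a + b * temperature from a calibration run."""
    n = len(temps)
    mean_t = sum(temps) / n
    mean_e = sum(pressure_errors) / n
    cov = sum((t - mean_t) * (e - mean_e) for t, e in zip(temps, pressure_errors))
    var = sum((t - mean_t) ** 2 for t in temps)
    b = cov / var
    a = mean_e - b * mean_t
    return a, b


def compensate(raw_pressure: float, temperature: float, a: float, b: float) -> float:
    """Remove the temperature-dependent error predicted by the fitted line."""
    return raw_pressure - (a + b * temperature)


if __name__ == "__main__":
    reference_kpa = 101.3
    temps = [float(t) for t in range(0, 45, 5)]
    raw = [reference_kpa + 0.5 + 0.02 * t for t in temps]  # hypothetical calibration log
    a, b = fit_temperature_error(temps, [r - reference_kpa for r in raw])
    print(compensate(reference_kpa + 0.5 + 0.02 * 33.0, 33.0, a, b))  # about 101.3
```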

Limited information: A standard camera captures visible light but not thermal infrared; radar penetrates rain and fog, where cameras and LiDAR degrade; radar measures velocity directly but resolves shape poorly. Each sensor captures only part of the picture, and fusion across modalities provides an understanding that no single sensor can.

Incomplete feature space: An autonomous vehicle cannot measure whether, or how fast, an object is moving from a single 2D camera image. Radar measures radial velocity directly through the Doppler effect, and successive LiDAR scans reveal how an object’s 3D position changes, supplying the temporal dimension needed for motion prediction.

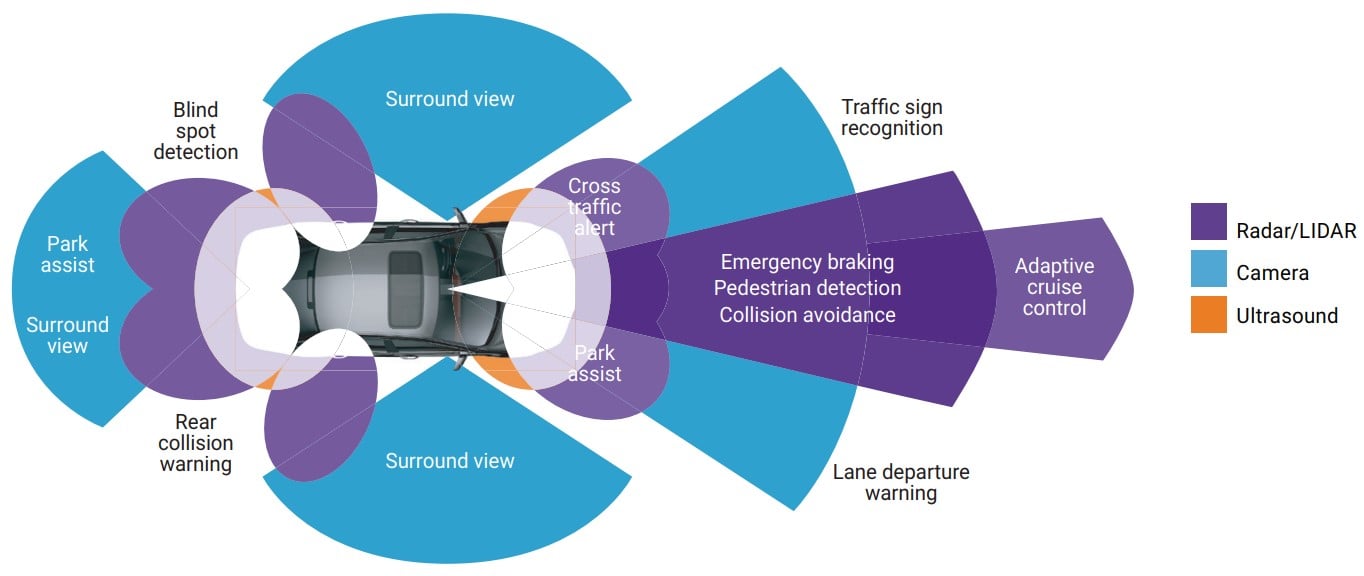

Sensor Fusion for Autonomous Vehicles: Critical Infrastructure

Autonomous vehicles represent perhaps the most demanding sensor fusion application, combining multiple sensor modalities operating across different physical principles, all fused in real time to guide split-second safety decisions.

Multi-Sensor Architecture:

- GNSS (GPS) provides absolute geographic position (1-3 meter accuracy)

- Inertial Measurement Unit (IMU) measures acceleration and rotation continuously, providing high-frequency orientation and relative positioning

- LiDAR (Light Detection and Ranging) provides precise 3D geometry of surroundings

- Cameras provide visual context such as traffic signs and lane markings, supporting semantic understanding

- Radar provides long-range detection and velocity measurement

The Fusion Challenge: Each sensor has distinct strengths and weaknesses:

- GNSS provides an absolute reference but loses signal in tunnels, in urban canyons, and under overpasses

- IMU provides continuous high-frequency updates but drifts significantly over time

- LiDAR provides precise 3D geometry but degrades in heavy rain and snow and is computationally intensive

- Cameras provide semantic understanding but fail in darkness and are affected by reflections and transparent surfaces

- Radar provides velocity measurement and robustness to weather but has lower resolution

Advanced Fusion Solutions: Automotive engineers employ Extended Kalman Filters (EKF) and Unscented Kalman Filters (UKF) to fuse GNSS and IMU data, maintaining continuous positioning even during GNSS outages. When the GNSS signal is lost (in a tunnel, for example), the IMU carries the position estimate forward by dead reckoning; because integrated IMU errors grow with time, this estimate remains usable only for limited outage durations. When GNSS reacquires the signal, the Kalman filter smoothly transitions back to the absolute reference, correcting the accumulated IMU drift.
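The GNSS/IMU behavior described above can be reproduced with a minimal one-dimensional linear Kalman filter (a plain Kalman filter rather than an EKF or UKF, since this toy model is linear). IMU acceleration drives the prediction step, and GNSS position, when available, drives the correction step. The state layout, noise variances, and class name are assumptions chosen for illustration; a real vehicle filter tracks 3D position, velocity, attitude, and sensor biases.

```python
from dataclasses import dataclass, field


@dataclass
class GnssImuKF:
    """Toy 1D Kalman filter with state [position (m), velocity (m/s)].

    IMU acceleration is treated as a control input in predict(); GNSS position
    is the measurement in update(). Noise variances are assumed values.
    """
    accel_var: float   # IMU acceleration noise variance, (m/s^2)^2
    gnss_var: float    # GNSS position noise variance, m^2
    x: list[float] = field(default_factory=lambda: [0.0, 0.0])
    P: list[list[float]] = field(default_factory=lambda: [[1.0, 0.0], [0.0, 1.0]])

    def predict(self, accel: float, dt: float) -> None:
        pos, vel = self.x
        # x = F x + B u with F = [[1, dt], [0, 1]], B = [dt^2 / 2, dt]
        self.x = [pos + vel * dt + 0.5 * accel * dt**2, vel + accel * dt]
        (p00, p01), (p10, p11) = self.P
        q = self.accel_var
        # P = F P F^T + Q, with Q from acceleration noise acting over dt
        self.P = [
            [p00 + dt * (p01 + p10) + dt**2 * p11 + q * dt**4 / 4,
             p01 + dt * p11 + q * dt**3 / 2],
            [p10 + dt * p11 + q * dt**3 / 2,
             p11 + q * dt**2],
        ]

    def update(self, gnss_pos: float) -> None:
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.gnss_var          # innovation variance, H = [1, 0]
        k0, k1 = p00 / s, p10 / s        # Kalman gain
        y = gnss_pos - self.x[0]         # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P = (I - K H) P
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]


if __name__ == "__main__":
    kf = GnssImuKF(accel_var=0.01, gnss_var=4.0, x=[0.0, 10.0])
    for step in range(1, 51):
        kf.predict(accel=0.0, dt=0.1)                 # every IMU sample (10 Hz)
        if step % 10 == 0:
            kf.update(gnss_pos=10.0 * step * 0.1)     # GNSS fix once per second
    print(kf.x, kf.P[0][0])
```

During an outage the caller simply keeps calling `predict` and skips `update`. The position variance `P[0][0]` then grows, so the first GNSS fix after the outage receives a large gain and pulls the drifted estimate back toward the absolute reference.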

More sophisticated systems employ consensus-based distributed Kalman filters that fuse GNSS, IMU, and LiDAR simultaneously. When GNSS is blocked and LiDAR map-matching fails, the system relies more heavily on the IMU while reducing its confidence in the other measurements. This adaptive approach is reported to improve localization accuracy by 17% over traditional GNSS/INS fusion when one sensor modality fails.

Industrial IoT: Predictive Maintenance Through Multi-Sensor Fusion

Manufacturing predictive maintenance represents another critical sensor fusion application, where early failure detection prevents catastrophic downtime and safety hazards.

Traditional vs. Sensor Fusion Approaches:

Traditional equipment monitoring relies on single parameters: vibration sensors detect bearing problems, temperature sensors indicate thermal issues, acoustic sensors detect developing faults. Each operates independently with thresholds triggering maintenance alerts when exceeded.

Sensor fusion dramatically improves failure prediction accuracy. Rather than analyzing vibration independently, fusion combines vibration data with temperature, pressure, speed, electrical current, and acoustic data. A bearing exhibiting elevated vibration combined with rising temperature, increasing acoustic emissions, and elevated electrical current signatures indicates imminent failure far more reliably than any single indicator.
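The advantage of combining several weak indicators can be illustrated statistically: express each channel as a z-score against a healthy baseline and sum the squares. Under an assumed model of independent Gaussian channels, that sum follows a chi-square distribution with one degree of freedom per channel. The baseline statistics below are hypothetical, and correlated channels would call for a full Mahalanobis distance with a covariance matrix instead.

```python
# Hypothetical healthy-machine baseline per channel: (mean, standard deviation)
BASELINE = {
    "vibration_mm_s": (2.0, 0.5),
    "temperature_c": (55.0, 3.0),
    "acoustic_db": (70.0, 2.0),
    "current_a": (12.0, 0.8),
}
CHI2_99_4DOF = 13.28  # 99th percentile of chi-square with 4 degrees of freedom


def z_scores(reading: dict[str, float]) -> dict[str, float]:
    return {name: (reading[name] - mean) / std for name, (mean, std) in BASELINE.items()}


def single_sensor_alerts(reading: dict[str, float], z_limit: float = 3.0) -> list[str]:
    """Traditional approach: each channel checked against its own threshold."""
    return [name for name, z in z_scores(reading).items() if abs(z) > z_limit]


def fused_alert(reading: dict[str, float]) -> bool:
    """Fused approach: sum of squared z-scores against a chi-square threshold.

    Assumes the channels are independent and Gaussian under normal operation.
    """
    return sum(z * z for z in z_scores(reading).values()) > CHI2_99_4DOF


if __name__ == "__main__":
    worn = {"vibration_mm_s": 3.0, "temperature_c": 61.0, "acoustic_db": 74.0, "current_a": 13.6}
    print(single_sensor_alerts(worn), fused_alert(worn))  # [] True
```

With every channel two standard deviations high, no single-sensor 3-sigma threshold fires, yet the fused statistic (about 16) exceeds the 99% chi-square threshold for four channels (about 13.28).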

Murata’s research illustrates this principle in smart factories: equipment monitored through single sensors achieves 15-25% predictive accuracy, while multi-sensor fusion achieves 90-95%, identifying failures 2-4 weeks in advance with high confidence. This roughly fourfold to sixfold accuracy improvement lets plants replace unplanned maintenance (equipment failing during production) with planned maintenance scheduled before failures occur.

Healthcare: From Vital Signs to Comprehensive Health Understanding

Wearable health monitoring exemplifies sensor fusion transforming healthcare from episodic (clinical visits measuring blood pressure, heart rate at a moment in time) to continuous (monitoring these parameters throughout daily life, recognizing patterns and anomalies).

Single-Sensor Limitations:

- Heart rate monitors without activity context cannot distinguish normal exercise response from dangerous arrhythmia

- SpO₂ (blood oxygen) sensors without respiratory data cannot reliably attribute oxygen desaturation to apnea events

- ECG monitors without motion data cannot distinguish movement artifact from genuine cardiac abnormality

Multi-Sensor Fusion Solutions:

- ECG + PPG (photoplethysmogram) + accelerometer: Detects heart rate and respiration rate, and distinguishes genuine arrhythmias from motion artifact

- ECG + multiple PPG channels: Fused through machine learning, these signals achieve 95%+ accuracy in atrial fibrillation detection

- Accelerometer + gyroscope + pressure sensor: Detects gait patterns distinguishing normal walking from Parkinson’s disease-affected gait with 90%+ accuracy
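Motion artifacts in PPG are often suppressed by adaptive noise cancellation, with the accelerometer serving as a noise reference. The sketch below uses the classic least-mean-squares (LMS) adaptive filter; it assumes the accelerometer signal is correlated with the artifact but not with the pulse waveform, and the tap count and step size `mu` are illustrative values. LMS is one textbook technique for this problem, not necessarily the one used by the devices or studies mentioned above.

```python
def lms_cancel(primary: list[float], reference: list[float],
               taps: int = 4, mu: float = 0.01) -> list[float]:
    """Least-mean-squares adaptive noise canceller.

    primary: PPG samples contaminated by motion artifact.
    reference: accelerometer samples, assumed correlated with the artifact
    but not with the pulse waveform.
    Returns the error signal, i.e. the PPG estimate with the artifact removed.
    """
    w = [0.0] * taps
    history = [0.0] * taps  # most recent reference samples, newest first
    cleaned = []
    for d, x in zip(primary, reference):
        history = [x] + history[:-1]
        y = sum(wi * xi for wi, xi in zip(w, history))       # artifact estimate
        e = d - y                                             # cleaned sample
        w = [wi + mu * e * xi for wi, xi in zip(w, history)]  # LMS weight update
        cleaned.append(e)
    return cleaned
```

The filter never needs a clean PPG reference: it adapts only to whatever part of the primary signal it can predict from the accelerometer, which by assumption is the artifact.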

Research demonstrates that sensor fusion improves human activity recognition accuracy from 60-75% using single sensors to 94-95% using fused multi-sensor data. This improvement enables applications like fall detection in elderly populations (distinguishing actual falls from normal sitting movements) and workout classification (differentiating between walking, running, cycling based on acceleration patterns).
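A fall detector that fuses accelerometer and gyroscope data can be sketched as three checks that must all pass: a hard impact, a fast body rotation shortly before the impact, and stillness afterwards. Sitting down typically produces neither the impact nor the rotation. All thresholds are hypothetical placeholders; practical detectors tune them on labelled data or replace the rules with a trained classifier.

```python
from statistics import pstdev


def is_fall(accel_mag_g: list[float], gyro_mag_rad_s: list[float], fs_hz: float,
            impact_g: float = 2.5, rotation_rad_s: float = 3.0,
            still_s: float = 2.0, still_std_g: float = 0.05) -> bool:
    """Flag a fall when impact, rotation, and subsequent stillness all occur.

    Both streams are assumed to be sampled together at fs_hz; all thresholds
    are hypothetical placeholders.
    """
    impact = max(range(len(accel_mag_g)), key=accel_mag_g.__getitem__)
    if accel_mag_g[impact] < impact_g:
        return False  # no hard impact (e.g. sitting down)
    lookback = int(fs_hz)  # search for rotation in the second before impact
    if max(gyro_mag_rad_s[max(0, impact - lookback):impact + 1]) < rotation_rad_s:
        return False  # impact without the rapid rotation of a fall
    n_still = int(still_s * fs_hz)
    after = accel_mag_g[impact + 1:impact + 1 + n_still]
    return len(after) == n_still and pstdev(after) < still_std_g
```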

Sensor Fusion Algorithms: The Intelligence Layer

Raw sensor data alone doesn’t create understanding—algorithms must intelligently synthesize multi-modal information. Different algorithms suit different application requirements:

Kalman Filter and Extended Kalman Filter (EKF) remain the most widely deployed sensor fusion algorithms. The Kalman filter is the optimal estimator, in the minimum-mean-square-error sense, for linear systems with Gaussian noise. It operates through predict-correct cycles: predict the next state from a physics model (a moving object should travel distance = velocity × time), then correct that prediction using sensor measurements. The correction weights the prediction and the measurement according to their respective uncertainties, so the more trustworthy source dominates. The EKF extends Kalman filtering to mildly non-linear systems by linearizing the models with a first-order Taylor expansion around the current estimate; it is then no longer strictly optimal, but it often works well in practice.
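For reference, the predict-correct cycle of the linear Kalman filter is usually written as follows, in conventional textbook notation (not notation used elsewhere in this article): state estimate x̂, covariance P, state-transition matrix F, control input u with matrix B, process noise covariance Q, measurement z, measurement matrix H, and measurement noise covariance R.

```latex
\begin{aligned}
\text{Predict:}\quad & \hat{x}_{k|k-1} = F_k \hat{x}_{k-1|k-1} + B_k u_k \\
& P_{k|k-1} = F_k P_{k-1|k-1} F_k^{\top} + Q_k \\
\text{Correct:}\quad & y_k = z_k - H_k \hat{x}_{k|k-1} \\
& S_k = H_k P_{k|k-1} H_k^{\top} + R_k \\
& K_k = P_{k|k-1} H_k^{\top} S_k^{-1} \\
& \hat{x}_{k|k} = \hat{x}_{k|k-1} + K_k y_k \\
& P_{k|k} = (I - K_k H_k) P_{k|k-1}
\end{aligned}
```

The gain K is where the uncertainty weighting happens: in the scalar case with H = 1 it reduces to K = P / (P + R), so a precise measurement (small R) pulls the estimate strongly toward z. The EKF keeps the same structure, but it propagates the state and predicts the measurement with the non-linear motion and measurement functions, and it replaces F and H by their Jacobians evaluated at the current estimate.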

Particle Filters handle strongly non-linear systems where the EKF fails. Rather than assuming Gaussian distributions, a particle filter represents the posterior as a weighted collection of particles (candidate states). Each particle is propagated through the motion model; when a measurement arrives, particles are weighted by its likelihood and resampled, producing a new population concentrated on high-likelihood states. Particle filters achieve 85-95% accuracy on complex scenarios where the EKF achieves only 60-75%, but they require 100-1000x more computation, making them practical only where sufficient computational resources are available.
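A bootstrap particle filter fits in a few dozen lines. The sketch below tracks a 1D position that is observed only through its range to a beacon mounted a fixed distance off the track, a non-linear measurement; the beacon geometry, noise levels, prior, and particle count are assumptions chosen for illustration.

```python
import math
import random


def particle_filter(measurements, velocity, dt, n_particles=1000,
                    motion_std=0.2, meas_std=0.5, beacon_offset=10.0, seed=0):
    """Bootstrap particle filter for 1D position from range-to-beacon measurements.

    Measurement model (assumed): z = sqrt(x^2 + beacon_offset^2) + Gaussian noise.
    Returns the weighted-mean position estimate after each measurement.
    """
    rng = random.Random(seed)
    particles = [rng.uniform(0.0, 20.0) for _ in range(n_particles)]  # assumed prior
    estimates = []
    for z in measurements:
        # 1. Predict: propagate each particle through the noisy motion model
        particles = [p + velocity * dt + rng.gauss(0.0, motion_std) for p in particles]
        # 2. Weight: Gaussian likelihood of the observed range
        weights = [math.exp(-0.5 * ((z - math.hypot(p, beacon_offset)) / meas_std) ** 2)
                   for p in particles]
        total = sum(weights)
        if total > 0:
            weights = [w / total for w in weights]
        else:
            weights = [1.0 / n_particles] * n_particles  # degenerate case: reset weights
        estimates.append(sum(w * p for w, p in zip(weights, particles)))
        # 3. Resample: draw a new population in proportion to the weights
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


if __name__ == "__main__":
    truth = [5.0 + 1.0 * k for k in range(1, 21)]
    ranges = [math.hypot(x, 10.0) for x in truth]
    print(particle_filter(ranges, velocity=1.0, dt=1.0)[-1], truth[-1])
```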

Deep Neural Networks increasingly dominate sensor fusion for perception tasks (object detection, semantic understanding) due to their ability to learn complex patterns from data. Multi-stream neural networks process sensor-specific inputs (LiDAR point clouds through specialized layers, camera images through CNNs) through separate processing streams, fusing at intermediate layers, then generating final predictions. Recent advances enabling neural networks to run on edge devices (microcontrollers, embedded GPUs) have democratized deployment of deep learning-based sensor fusion.
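The multi-stream pattern can be shown structurally with a toy network in plain Python: one dense layer per sensor stream, concatenation at an intermediate layer, then a shared prediction head. The weights are random and untrained, the layer sizes are arbitrary assumptions, and dense layers stand in for the CNN and point-cloud encoders a real system would use; the sketch only demonstrates the data flow of intermediate fusion.

```python
import math
import random


def dense(x, weights, bias, relu=True):
    """Fully connected layer; weights[j] holds the input weights of output unit j."""
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return [max(0.0, v) for v in out] if relu else out


def init_layer(rng, n_in, n_out):
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


class MidFusionNet:
    """Two sensor-specific streams fused by concatenation at an intermediate layer."""

    def __init__(self, camera_dim, lidar_dim, hidden=8, n_classes=3, seed=0):
        rng = random.Random(seed)
        self.camera_stream = init_layer(rng, camera_dim, hidden)
        self.lidar_stream = init_layer(rng, lidar_dim, hidden)
        self.fusion_layer = init_layer(rng, 2 * hidden, hidden)
        self.head = init_layer(rng, hidden, n_classes)

    def forward(self, camera_features, lidar_features):
        cam = dense(camera_features, *self.camera_stream)   # camera-specific stream
        lidar = dense(lidar_features, *self.lidar_stream)   # LiDAR-specific stream
        fused = dense(cam + lidar, *self.fusion_layer)      # intermediate fusion
        logits = dense(fused, *self.head, relu=False)
        peak = max(logits)
        exps = [math.exp(v - peak) for v in logits]
        total = sum(exps)
        return [e / total for e in exps]                    # softmax class probabilities
```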

Bayesian Networks provide interpretable probabilistic models that capture complex sensor dependencies. Unlike black-box neural networks, Bayesian networks explicitly represent which sensors influence which variables, enabling engineers and diagnosticians to understand why a system reached its conclusion.
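A minimal Bayesian network makes the interpretability point concrete. Below, a hidden variable (whether a room is occupied) has two observed children, a PIR motion sensor and a CO₂ threshold sensor, assumed conditionally independent given occupancy. The probability tables are hypothetical; inference is exact enumeration, so every factor contributing to the posterior can be inspected.

```python
# Hypothetical conditional probability tables for a two-sensor occupancy model:
#   Occupied -> PIR motion,  Occupied -> CO2 above threshold
P_OCCUPIED = 0.3
P_SENSOR_GIVEN = {        # sensor: (P(fires | occupied), P(fires | empty))
    "pir_motion": (0.8, 0.05),
    "co2_high": (0.7, 0.10),
}


def posterior_occupied(evidence: dict[str, bool]) -> float:
    """Exact inference by enumeration over the single hidden variable."""
    joint_occ, joint_empty = P_OCCUPIED, 1.0 - P_OCCUPIED
    for sensor, fired in evidence.items():
        p_occ, p_empty = P_SENSOR_GIVEN[sensor]
        joint_occ *= p_occ if fired else 1.0 - p_occ
        joint_empty *= p_empty if fired else 1.0 - p_empty
    return joint_occ / (joint_occ + joint_empty)


if __name__ == "__main__":
    # Person sitting still: no PIR motion, but CO2 is elevated
    print(posterior_occupied({"pir_motion": False, "co2_high": True}))
```

With these numbers, a person sitting still (no PIR motion) in a room with elevated CO₂ yields a posterior occupancy probability of about 0.39, up from the 0.3 prior, while both sensors firing yields about 0.98.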

Architectural Patterns: From Centralized to Distributed

How sensor data flows through fusion architectures fundamentally affects system properties:

Centralized Fusion transmits all raw sensor data to a central processor that performs every fusion computation. This simplifies the fusion algorithms (a single estimator sees all the data and can achieve globally optimal fusion) but creates bandwidth bottlenecks: thousands of sensors streaming continuous data consume substantial network capacity.

Distributed Fusion processes sensor data locally (at sensors or edge nodes), transmitting only processed results to central systems. This dramatically reduces bandwidth (a sensor detecting anomalies transmits alert rather than continuous time-series data) and enables real-time edge processing. However, distributed fusion introduces complexity: local processors must be synchronized, algorithms become more complex, and system coordination becomes challenging at scale.
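A standard way for a central node to combine local results is inverse-variance weighting: each edge node sends only its estimate and variance, and the fused estimate weights each by its precision. The sketch assumes scalar estimates with independent errors; when nodes share measurements or models their errors are correlated, and a conservative rule such as covariance intersection is the safer choice.

```python
def fuse_local_estimates(estimates: list[tuple[float, float]]) -> tuple[float, float]:
    """Combine (mean, variance) pairs reported by edge nodes.

    Inverse-variance weighting; optimal only if the nodes' errors are independent.
    """
    information = sum(1.0 / var for _, var in estimates)
    fused_mean = sum(mean / var for mean, var in estimates) / information
    return fused_mean, 1.0 / information


if __name__ == "__main__":
    print(fuse_local_estimates([(10.0, 1.0), (20.0, 4.0)]))  # (12.0, 0.8)
```

Two nodes reporting 10.0 (variance 1.0) and 20.0 (variance 4.0) fuse to 12.0 with variance 0.8, tighter than either input, while each node transmits two numbers instead of its raw time series.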

Hierarchical Fusion organizes systems in pyramidal structures: thousands of edge sensors feed local edge nodes; edge nodes aggregate to regional hubs; hubs connect to enterprise systems. Each level performs appropriate fusion for its scale—edge nodes handle microsecond-latency decisions, regional hubs perform multi-minute analytics, enterprise systems conduct strategic analysis.

Implementation Challenges: Turning Theory into Practice

Moving from sensor fusion concepts to production deployments exposes critical challenges:

Calibration and Synchronization: Sensors must be individually calibrated against known reference standards, and when multiple sensors are combined, cross-calibration ensures their measurements align. Time synchronization is equally critical: if GNSS and IMU timestamps disagree by 100 ms, a vehicle traveling at 20 m/s covers 2 m in that interval, so readings treated as simultaneous actually describe positions 2 m apart and fusion is severely degraded. Sophisticated systems employ hardware-level synchronization and timestamp correction.
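Once the clock offset between two sensors has been measured, timestamp correction can be as simple as shifting one stream and resampling it at the other stream’s epochs. The sketch below linearly interpolates IMU samples at GNSS timestamps after removing a known offset; estimating the offset itself (for example from a GNSS receiver’s pulse-per-second output) is outside its scope, and the function names are hypothetical.

```python
from bisect import bisect_left


def interpolate_at(times: list[float], values: list[float], t: float) -> float:
    """Linear interpolation of a sampled signal; times must be sorted ascending.

    Values outside the sampled range are clamped rather than extrapolated.
    """
    i = bisect_left(times, t)
    if i == 0:
        return values[0]
    if i == len(times):
        return values[-1]
    t0, t1 = times[i - 1], times[i]
    frac = (t - t0) / (t1 - t0)
    return values[i - 1] + frac * (values[i] - values[i - 1])


def align_imu_to_gnss(gnss_times, imu_times, imu_values, clock_offset_s=0.0):
    """Resample IMU values at GNSS epochs after removing a known clock offset.

    clock_offset_s is assumed to be IMU clock minus GNSS clock, measured elsewhere.
    """
    corrected = [t - clock_offset_s for t in imu_times]
    return [interpolate_at(corrected, imu_values, t) for t in gnss_times]
```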

Sensor Drift and Degradation: Over months and years, sensor outputs drift due to component aging, thermal effects, and environmental poisoning (particularly chemical sensors). Fusion systems must detect and compensate for drift through redundancy and baseline checking—occasionally measuring known reference values to assess sensor accuracy.

Noise and Data Quality: Real sensor data is noisy (corrupted by thermal noise, quantization errors, environmental interference). Fusion algorithms must handle noise robustly—outlier-rejection techniques prevent single bad measurements from corrupting entire estimates.
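One widely used outlier-rejection technique in Kalman-filter pipelines is innovation gating: before a measurement is applied, its innovation (measurement minus prediction) is normalized by its expected variance and compared with a chi-square quantile. The sketch assumes a scalar measurement with Gaussian errors and a 95% gate; both are illustrative choices.

```python
CHI2_95_1DOF = 3.841  # 95th percentile of chi-square with 1 degree of freedom


def gate_measurement(z: float, predicted: float, predicted_var: float, meas_var: float) -> bool:
    """Return True if the measurement is statistically consistent with the prediction.

    Normalized innovation squared: under correct models y^2 / S follows a
    chi-square distribution with one degree of freedom, so larger values are
    rejected as likely outliers.
    """
    innovation = z - predicted
    s = predicted_var + meas_var  # innovation variance
    return innovation * innovation / s <= CHI2_95_1DOF
```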

Computational Constraints: On resource-constrained IoT devices (microcontrollers, wireless sensors), computationally intensive algorithms like particle filters may be infeasible. Designers must select algorithms balancing accuracy against computational budgets.

Privacy and Security: As sensor fusion enables increasingly detailed understanding of users and environments, privacy risks escalate. Sensor data revealing health conditions, behavioral patterns, or facility configurations requires careful protection.

Future Directions: Quantum-Classical Hybrid and Neuromorphic Fusion

Emerging technologies promise to transform sensor fusion capabilities:

Quantum Computing Integration: Quantum algorithms could dramatically accelerate sensor fusion computations for certain problem classes. Quantum machine learning could optimize sensor weighting; quantum simulation could handle complex multi-modal fusion that classical computers struggle with.

Neuromorphic Computing: Brain-inspired architectures process sensor data through event-driven spiking neural networks, dramatically reducing power consumption compared to traditional neural networks. Each sensor spike triggers computation only when necessary, enabling constant low-power sensor monitoring with computation activating only during significant events.
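The event-driven idea can be illustrated on the sensing side with delta (level-crossing) encoding, the principle event cameras apply per pixel: a sample produces an event only when the signal has moved by at least a threshold since the last event, so a steady signal generates no events and triggers no downstream computation. This is a simplified sketch with an assumed threshold, not a model of any particular neuromorphic chip.

```python
def delta_encode(samples: list[float], threshold: float) -> list[tuple[int, int]]:
    """Convert a sampled signal into sparse (sample_index, polarity) events."""
    events = []
    reference = samples[0]
    for k, x in enumerate(samples[1:], start=1):
        # Emit one event per threshold crossed since the last reference level
        while x - reference >= threshold:
            reference += threshold
            events.append((k, +1))
        while reference - x >= threshold:
            reference -= threshold
            events.append((k, -1))
    return events


if __name__ == "__main__":
    print(delta_encode([20.0] * 1000, 0.5))                 # [] (steady signal, no work)
    print(delta_encode([0.0, 0.2, 0.6, 1.1, 0.4], 0.5))     # [(2, 1), (3, 1), (4, -1)]
```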

AI-Enhanced Adaptive Fusion: Machine learning meta-algorithms will learn optimal fusion strategies for specific environments and sensor configurations, dynamically adapting as sensors degrade or environmental conditions change.

Sensor fusion transforms IoT devices from simple data collectors into intelligent systems achieving context awareness impossible through individual sensors. By combining complementary sensor modalities through sophisticated algorithms, organizations gain 90-95% accuracy in predictive maintenance (vs. 15-25% with single sensors), dramatically improved autonomous vehicle safety, comprehensive health monitoring replacing episodic clinical snapshots, and smart environments responding intelligently to occupants and conditions.

The diversity of fusion algorithms—from optimal Kalman filtering for linear systems to flexible particle filters for complex scenarios to powerful deep learning for perception—provides a rich toolkit enabling designers to match algorithms to application requirements. Distributed and hierarchical architectures enable scaling from single devices to continent-spanning IoT networks.

As sensor technology advances, computational capabilities increase, and connectivity improves through 5G, sensor fusion will become not an optional optimization but fundamental infrastructure in intelligent IoT systems. Organizations that embrace sensor fusion today, selecting appropriate algorithms, architectures, and sensor combinations, will build systems with substantially better safety, accuracy, efficiency, and autonomy than systems relying on single sensors or unfused data streams.